Image by DALL·E Pic: Midjourney

Editors' Note: Many Fast News images are stylised illustrations generated by DALL·E. Photorealism is not intended. View as early and evolving AI art!

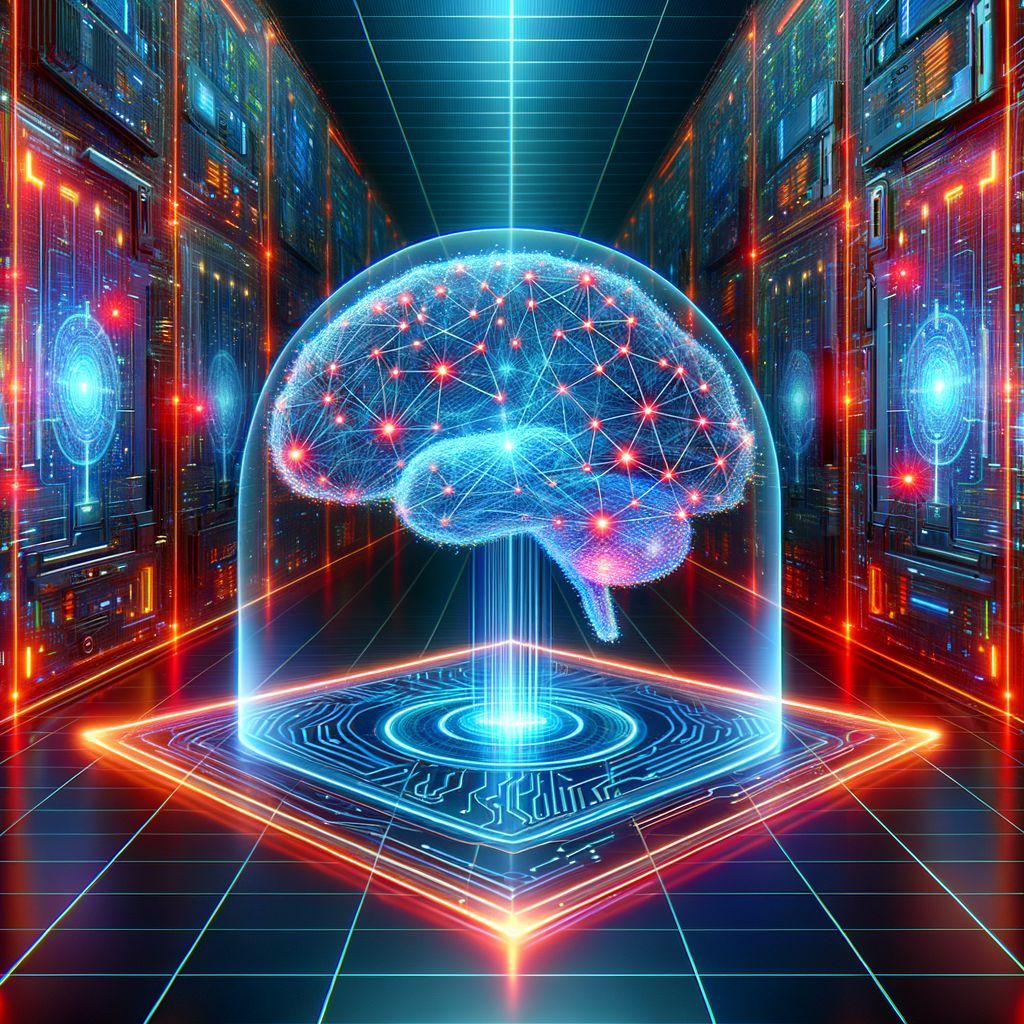

AI's new defence line,

Cloudflare's firewall shines bright,

Guarding day and night.

Cloudflare's 'Firewall for AI' offers new protections for large language models

Connectivity cloud business Cloudflare has developed a new solution to protect large language models (LLMs) from potential abuse by identifying and blocking attacks before they can cause damage.

'Firewall for AI' has been created to bolster confidence in AI models after a recent study revealed that only one in four C-suite executives had confidence in their organisation's preparedness to address AI risks.

The new product will enable security teams to protect their LLM applications from vulnerabilities that can be weaponised against AI models, rapidly detecting new threats and automatically blocking them without human intervention. The security measure is implemented by default and is available free of charge.

"When new types of applications emerge, new types of threats follow quickly. That's no different for AI-powered applications. With Cloudflare's Firewall for AI, we are helping build security into the AI landscape from the start," Cloudflare Co-Founder and CEO, Matthew Prince, said. "We will provide one of the first-ever shields for AI models that will allow businesses to take advantage of the opportunity that the technology unlocks, while ensuring they are protected."

Gartner, a global research and advisory firm, has emphasised the importance of comprehensive security measures when deploying AI applications.

"You cannot secure a GenAI application in isolation. Always start with a solid foundation of cloud security, data security and application security, before planning and deploying GenAI-specific security controls."