Privacy and eSafety Commissioners: Curbing big tech's 'data extractivism' will improve web privacy and safety; teen social media ban may not

SXSW attendees heard that digital platforms are hiding behind "privacy" and ignoring safety concerns to protect the huge profits of their data extractive business models

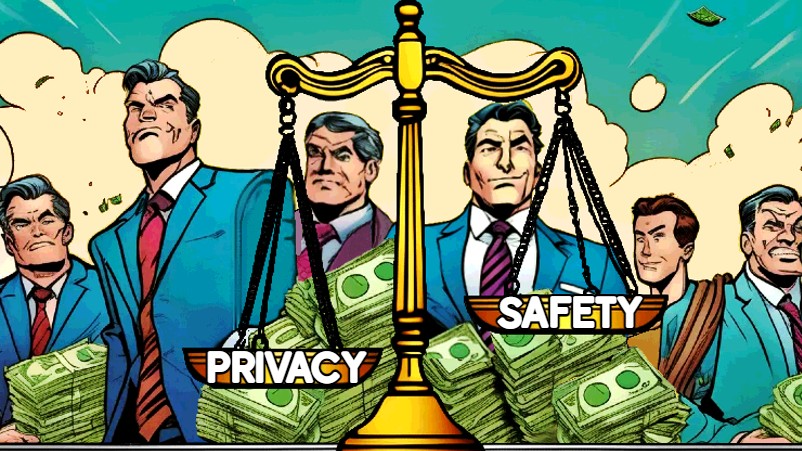

It is a mistake to pit the priorities of online safety against those of online privacy, and many of the people who do so represent the interests of giant digital platforms that promote the idea of a decentralised open web, despite having enclosed and centralised control of that same global infrastructure. That was a key message from Privacy Commissioner Carly Kind, eSafety Commissioner Julie Inman Grant, lawyer and human rights activist Lizzie O'Shea, and UNSW Criminology Professor Michael Salter at SXSW last week. The "data extractive business models" of digital giants came under heavy fire, and limiting those models was described as the best way to reduce privacy and safety harms by removing the economic incentives that underpin "surveillance capitalism."

What you need to know:

- The "Independence of Cyberspace" was an idea that emerged in the 1990s along with the internet itself, but the companies behind the 'platformisation' of the web seem to have little regard for the concepts of internet openness and democratisation, attendees at an SXSW panel heard last week.

- Instead, privacy and US-centric notions of freedom of speech conveniently dovetail with the commercial imperative to resist attempts to undermine the "data extractive" business models that underpin the big digital platforms.

- Yet addressing the incentives companies have to hoover up vast troves of data about users is likely the best way to improve online safety and privacy.

- The harms to society, such as enabling industrial-scale child sexual abuse, are extreme, with as many as 300 million children around the world affected in some way.

- Research discussed on the panel revealed that 4-6 per cent of Australian men admitted to researchers they would have sexual contact with a child under the age of 12 if they knew no one would find out.

- Then there are the dangers of radicalisation from algorithms that optimise for engagement, and of people suffering serious harm from data about them that is sold via regular commercial adtech services.

- The targeting of women searching for information about abortion services, and the outing of a priest after commercially available data revealed his use of the gay dating app Grindr, were other examples.

- It is a false binary to pit privacy against online safety, says eSafety Commissioner Julie Inman Grant, while Privacy Commissioner Carly Kind advocated for the "fair and reasonable test" that bubbled up out of the review of the Privacy Act as a way of mitigating the excesses of "data extractive" business models.

- Kind also expressed scepticism about the efficacy of the social media ban proposed by the Commonwealth Government.

There are huge privacy gains to be made by attacking the "data extractivism" that underpins the business models of digital giants who have enclosed, privatised and centralised control of the open, democratic, and decentralised internet they claim they want to protect.

Their defence is often couched in the language of privacy protection, which they hide behind as an excuse for not acting on crimes such as child sexual abuse, which could affect as many as 300 million children around the world, attendees at an SXSW panel on online safety and privacy were told.

And while she didn't make the comments about "data extractivism" herself (that came from lawyer and human rights activist Lizzie O'Shea), Australia's Privacy Commissioner Carly Kind gave a hat tip to the idea of reining in the digital giants when she told attendees that the "fair and reasonable test" proposed in the Privacy Review would go some way to countering the worst effects of those data extractive business models.

Some of those effects, as outlined by Michael Salter, Professor of Criminology at UNSW, are horrendous.

According to Salter, who is also Director of Childlight UNSW, the Australasian research hub of Childlight, the Global Child Safety Institute, there are about 3 million active offenders across the 10 most active online child abuse forums accessible on Tor (The Onion Router), long regarded as the gateway to the so-called 'dark web'.

"Yet, when we object to this, we're told, I'm really sorry, but you know, Tor is a fundamental human rights tool, and yes, a bunch of children are going to be abused and their lives are going to be destroyed. But it's worth it for human rights," he said.

He referenced the results of a survey conducted by an organisation he works with that demonstrated how pervasive the issue of online child abuse is even in Australia.

"We conducted a representative survey of 2,000 Australian men. We asked them about their sexual interest in children and ... between 4 to 6 per cent of Australian men said that they would have sexual contact with a child under the age of 12 if they knew that no one would find out. So that's a really significant market segment that in other more regulated industries like TV, movies, radio, and print, the private sector cannot profit, or they are really constrained in their capacity to profit from that market segment."

For now, however, that is not true of the technology sector, which was given carte blanche in the early days of the web.

As Mi3 Fast News reported in August, digital platforms' argument that they are utilities and not publishers, despite doing all the things publishers do, like aggregating audiences with content and then selling them advertising, has come under pressure after an American court curtailed the protection offered by Section 230, the controversial law that shields Big Tech from liability for defamation and other offences. The decision is being appealed.

Salter said the idea that the internet industry should be treated differently emerged from Silicon Valley and from the technology sector whose self-image was based on a notion that independence from the state meant "independence from state law, and independence from terrestrial law."

"And by and large, the United States government and others around the world agreed with that posture."

Salter said governments essentially agreed to suspend the normal regulatory obligations they impose on other sectors. "So this is a multi-billion dollar sector that enjoyed a lack of child protection and other obligations that have been in place elsewhere."

Per Salter, "We published the world's first global index on child sexual abuse and exploitation in March this year. We conducted a meta-analysis of online victimisation surveys. We found that about 12 per cent of children in the last 12 months have experienced the non-consensual taking or the sharing of images online. So that's about 300 million children per year who will come into online sexual harm."

That about 4-6 per cent of men are motivated to perpetrate that harm illustrates the breadth of the problem, he said.

Not binary

However, it is not as simple as a safety versus privacy dichotomy, according to O'Shea.

"In the United States, various terrible groups are organising to identify people who use abortion when in states where it's illegal, to then find and prosecute women who may engage in searches online about accessing abortion services."

People are being targeted with data bought from the data extractive industries and then they are victimised for accessing information even though it is legal for them to travel to have the procedure, she said.

"Another example of this kind of predatory behaviour is that of a Catholic priest in a community who was identified by a bunch of vigilantes for being on Grindr (a gay dating app) and who lost his job because he had a vow of celibacy."

All the data used to identify him was available commercially and purchased legally, she said.

"There are huge privacy gains we could make by limiting data extractivism on all these business models which can make huge profits."

The industry has a problem, said O'Shea. "They're addicted to data, and there's all sorts of secondary consequences that are very harmful."

Those harms are not restricted to individuals but can affect society as a whole, she said, noting the data can be applied to algorithms recruiting people into extreme communities, "whether that is abuse of women, or toxic masculinity cultures that exist in profit online. For every one Andrew Tate, there are thousands and thousands of copycats who profit from the virality of their content."

In addition to privacy harms, she said, there are also safety harms.

"These business models work by incentivising extremist content to be published... we can prevent it by implementing good privacy reform (which is also a rights-respecting reform) that would stop at its source," per O'Shea.

"Limiting capacity to collect personal information at the source undermines that data extractive business model, and that's where we can make the most gain to improve the ecosystem overall."

Not mutually exclusive

eSafety Commissioner Julie Inman Grant agreed it is a false binary to pit privacy against online safety. "You also have to think of the gravity of potential harms at play."

Reacting to Salter's earlier comments on child sexual abuse, she said that a child who has been tortured and abused "has a right not to be re-traumatised, and they have a right to dignity."

Inman Grant's view is that Australia has 'threaded the needle pretty well' with its current standards, which she said have only recently undergone scrutiny by the Human Rights Committee.

She also noted work looking into the encryption standards of digital giants which demonstrated how both rights to privacy and to safety can be effectively balanced.

It is not her expectation that digital platforms will be told to hand over encryption keys, she said, "because it doesn't serve anyone's needs", but that does not absolve the platforms of responsibility.

"We want you [digital platforms] to provide us an alternate plan of action in terms of how you plan to deter and disrupt," she said.

Fair and reasonable

Privacy Commissioner Kind, meanwhile, said, "It's very helpful to think about the history of the internet as growing out of an essentially anti-government movement to decentralise power, and to decentralise access to the creation of information". She referenced the 1996 Declaration of the Independence of Cyberspace by John Perry Barlow, co-founder of the Electronic Frontier Foundation, before wryly noting, "That certainly isn't what has evolved or emerged over time."

Kind noted that the key feature of what we see today is what she described as the monopolistic power of a few major players through the 'platformisation' of the internet. Left unsaid is that those players have a powerful vested commercial interest in resisting stronger regulation and legislation that would limit their freedom to make money. Instead, she said, "We can't have this conversation without acknowledging that the presence of those handful of platforms really does define the problem space here, both in terms of how we protect privacy and how we protect safety."

Kind said there are tangible proposals in the Privacy Act overhaul, such as a 'fair and reasonable' test, that would, in her words, start to rein in the worst excesses of the data-extracted digital environment that O'Shea referred to in her comments.

"There are a range of other proposals on the table [such as on] targeted advertising, which are small steps towards a healthier, better or more secure online environment for everyone," said Kind.

She was more downbeat about another mooted government reform intended to protect children.

"I have less confidence that other proposals on the table currently, such as a social media ban, are perhaps going to achieve the same outcomes."

Others, such as high school principals on the front line, take a different view.