Social media ban: Cybersecurity honey pot concerns rise as tech sector gets an early glimpse into Fed's thinking and concerns around bias, privacy, data security and human rights

Could forcing every Australian social media user to age authenticate create a huge cybersecurity honey pot? Pic: Winnie the Pooh, A.A. Milne.

Technology service providers have been briefed on an Age Assurance Technology Trial designed to support the Federal Government's proposed ban on social media access for users under 16. The Government has allocated $6.5 million to the trial and hopes the ban will mitigate online harms such as bullying, stalking, and exposure to inappropriate content. However, critics warn that implementing age verification measures carries significant cybersecurity and privacy risks, and could create a "honey pot" for cyberhackers.

What you need to know

- Tech service providers have been given a glimpse into the Australian Federal Government's plans to implement a social media ban for children under 16 to minimise online harms, including bullying and mental health issues.

- A week after issuing a tender for a $6.5 million Age Assurance Technology Trial, the Government held an online briefing for service providers.

- The government's goal is to minimise harm such as bullying, stalking, image abuse, access to illegal and restricted content, and a range of potential mental health issues associated with excessive digital activity.

- Critics, including the Australian Information Security Association, warn such age verification requirements could create cybersecurity risks and privacy concerns.

- Relying on the technical know-how of digital giants does not guarantee data security. In June it was revealed that a major US identity verification company, AU10TIX, left login credentials exposed online for more than a year, allowing access to sensitive personal information. TikTok and X both reportedly use the platform.

- The trial aims to evaluate age assurance technologies for both users over 18 and those aged 13-16.

- Research indicates that while parents are concerned about their children's online privacy, many feel they lack control over their children's data security.

- The Office of the Australian Information Commissioner emphasises the importance of protecting children's privacy rather than preventing their online engagement, and Privacy Commissioner Carly Kind has already expressed scepticism about the ban.

Children frequently spend time online to connect with friends, learn, and be entertained. It is unrealistic to keep kids off the internet in the 21st century. However, online services designed to appeal to young people may not always be safe, appropriate and protect their privacy.

Technology service providers were given an early glimpse into the Federal Government's plans for a social media ban on children courtesy of an online industry briefing in mid-September.

That briefing was designed to provide some clarity on an Age Assurance Technology Trial tender issued by the Department of Infrastructure, Transport, Regional Development, Communications and the Arts on September 10. The tender closed on October 8.

The Government allocated $6.5 million for the trial, which, according to a statement from the Department at the time, “is testing different implementation approaches to help inform policy design.”

The Federal Government has said it wants to minimise online harm to children by banning them from social platforms until they are 16, to address issues such as bullying, stalking, and a range of potential mental health issues associated with excessive digital activity.

There are also serious issues of image abuse, and access to illegal and restricted content, as highlighted by a recent hedge fund report into Roblox, a site beloved by the age cohort the ban is targeting. As Mi3's Fast News revealed last month, the site was described as a "paedophile hellscape" by activist hedge fund Hindenburg Research.

According to the firm, "Our in-game research revealed an X-rated paedophile hellscape, exposing children to grooming, pornography, violent content, and extremely abusive speech."

Roblox disputed the Hindenburg report, saying it has stringent content moderation. That denial was at odds with the study, which claimed it "found 38 Roblox groups – one with 103,000 members – openly soliciting sexual favours and trading child pornography."

National Cabinet

In a media statement last week, Prime Minister Anthony Albanese said, “First Ministers agreed to the Commonwealth legislating a minimum age of 16 to access social media. Setting the minimum age at 16 will protect young Australians from the harms that come with social media, and will support mums, dads and carers to keep their kids safe.”

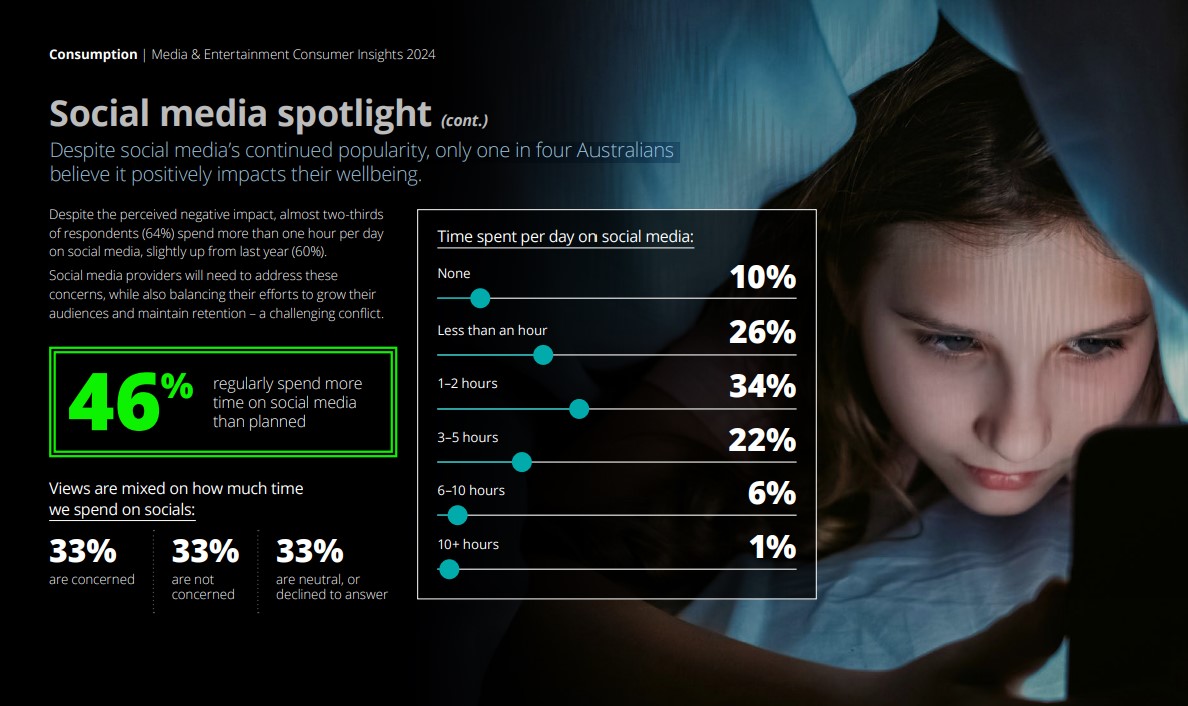

A plethora of studies indicate widespread community concern about social media. Most recently, Deloitte's Media & Entertainment Consumer Insights 2024 found that "despite social media’s continued popularity, only one in four Australians believe it positively impacts their wellbeing."

According to the report, "While social media remains incredibly popular, the time we spend on socials has stabilised amid a pivotal shift in consumption habits. Younger consumers in particular, appear increasingly mindful of how much of their day is spent scrolling feeds and are cutting back as a result."

Source: Deloitte's Media & Entertainment Consumer Insights 2024

Risky business

Enforcing a ban is a complex task, however, and carries its own risks. In its submission to the Government’s inquiry into Social Media and Online Safety last year, the Office of the eSafety Commissioner noted that while there is growing recognition of the need for robust age verification systems, the current landscape is fraught with challenges.

The inquiry highlighted that requiring all users to be identifiable or to provide substantial identity information to verify their age does not necessarily equate to improved safety. Instead, it risks significant unintended consequences, particularly for those who rely on a degree of anonymity for their safety and privacy online.

The submission outlined various age assurance tools, including age screening and age-gating techniques, which often rely on self-declaration. However, these methods can be easily circumvented, it said. For instance, children can simply enter false birthdates when creating accounts on social media platforms. Moreover, the submission emphasised that age assurance measures should not only be about verifying age but also about ensuring that platforms actively promote safe, age-appropriate experiences for children.

Age verification technology is not a one-size-fits-all solution, the submission said. It must be complemented by educational measures that empower children and their parents to navigate online spaces safely. Building trust in these systems is paramount, as users need assurance that their data will be protected and used appropriately.

Cybersecurity honey pot

Banning teens from social media involves verifying everybody’s age, not just teens, and critics have raised the risk of serious cybersecurity and privacy harm.

According to the Australian Information Security Association (AISA), “Implementation of a social media ban for teens will mandate the use of age verification or age assurance technological solutions which require the collection of identity information.”

A statement issued by the association warns the legislation risks creating a honey pot for cyberhackers.

And, AISA cautions, such concerns are not hypothetical.

“In June this year, a major identity verification company, AU10TIX, in the United States left login credentials exposed online for more than a year, allowing access to sensitive personal information. Platforms reportedly using this company include TikTok and X,” the group stated.

Age assurance trial

According to a summary of the tender on Tenders.gov.au, the successful Tenderer/s will conduct a technology trial independently through a standardised and replicable testing methodology against defined evaluation criteria.

"This includes assessing the stated technical capabilities for each available technology, live testing of each technology under replicable test conditions, and any other effective methodologies identified by the successful Tenderer/s," the tender reads.

The trial has two objectives:

- Firstly, to evaluate the maturity, effectiveness and readiness for use of available age assurance technologies that determine whether a user is 18 years of age or over, in order to permit the user to access age-restricted online content; and

- Secondly, to evaluate the maturity, effectiveness and readiness for use of available age assurance technologies that determine the age of a user in the 13-16 years age band, in order to permit the user to create an account on a social media website or application.

According to a blog post by Sydney-based engineering consultancy Engineering Business, effectiveness will be measured against criteria including accuracy, interoperability, reliability, ease of use, potential bias, privacy protection, data security, and human rights protections.

In a blog post published a week after the tender closed, Rebecca Brown, Director Digital Law Reform and Digital Platforms at the Office of the Australian Information Commissioner (OAIC), wrote, “Children frequently spend time online to connect with friends, learn and be entertained. It is unrealistic to keep kids off the internet in the 21st century.”

“However,” she noted, “online services designed to appeal to young people may not always be safe, appropriate and protect their privacy. We know Australian parents are concerned about this; a survey we conducted last year found 85 per cent of parents believe children must be empowered to use the internet and online services, but their data privacy must be protected.”

That research, conducted a year ago, revealed 91 per cent of parents said the privacy of their child’s personal information is of high importance when deciding to provide their child with access to digital devices and services. Nearly 80 per cent described protecting their children’s data as a major concern.

However, only half said they felt in control of their children’s privacy, and a clear majority said they felt they had no choice but to sign their children up to a particular service. Most said they were unclear about how to protect their children’s information while using a service.

The OAIC is not responsible for the tender, but it is responsible for developing the Children’s Online Privacy Code.

In her blog, Brown wrote, “Our ultimate objective is not to prevent children from engaging in the digital world, but rather to protect them within it through strengthened privacy protections for the handling of their personal information.”

It’s not the first time an executive from the OAIC has expressed a view contrary to the Government’s position on social media.

Speaking on an SXSW panel last month, Privacy Commissioner Carly Kind told attendees, “There are a range of other proposals on the table [such as] targeted advertising, which are small steps towards a healthier, better or more secure online environment for everyone… I have less confidence that other proposals on the table currently, such as a social media ban, are perhaps going to achieve the same outcomes.”